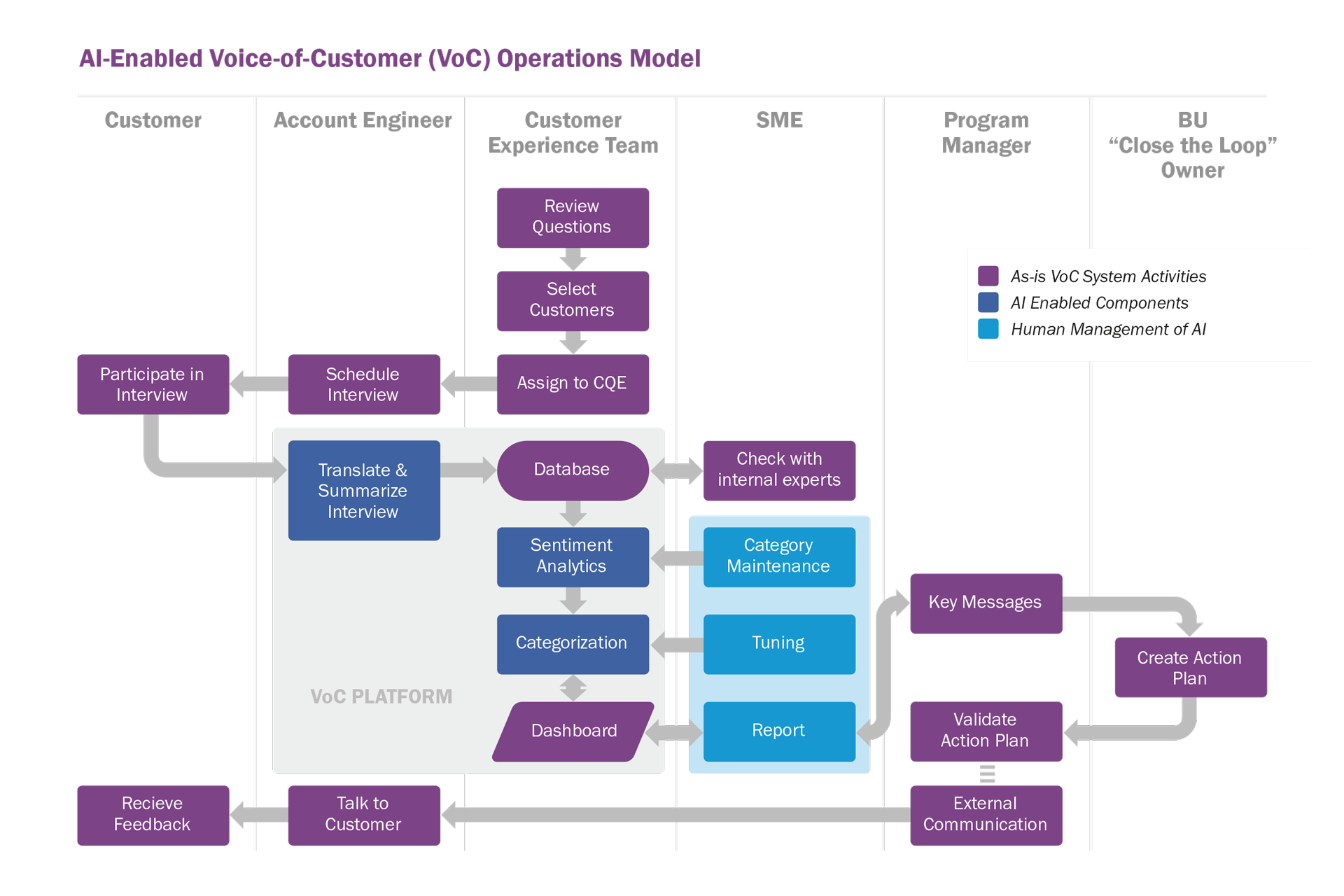

Supercharging a Voice of the Customer (VoC) program with AI helps it handle higher volumes and deliver faster analysis without increasing team size.

Our client, the VoC team within a Quality Assurance group, had investigated the “state of the possible” with artificial intelligence before the pandemic. Since then, reports and success stories about machine learning (ML) and AI capabilities built on newer technologies and algorithms had proliferated. Even within the client’s organization, AI was being used more widely and its benefits were becoming more broadly accepted.

The QA team approached McorpCX with a host of unique challenges:

Because the purpose of this engagement was to assess the art of the possible and provide insight into the feasibility of different solutions, we designed a systematic study of each platform’s natural language processing (NLP) capabilities across three tasks: sentiment analysis, categorization into topics, and insight discovery (clustering).

The study evaluated large language models such as BERT (Google) and GPT-3 (OpenAI), along with several newer low-code AI platforms being brought to market by startups. Because the client was already using Qualtrics, we also evaluated Qualtrics Discover (formerly Clarabridge, one of the NLP engines rated most highly by Gartner).

We computed scores at three points in the process: a “Raw Score” when the platform first processed the training data, a second score after we tuned each system, and a third score once the test data was processed. We then introduced a fourth, “Final Score,” computed after another time-boxed round of tuning, to understand how tunable each platform was given the “data drift” inherent in operational circumstances.
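The four-stage scoring protocol can be sketched in Python. This is a minimal illustration under stated assumptions, not the harness used in the engagement: a platform is modeled as a hypothetical `Classifier` function, a tuning round as a hypothetical `Tuner` callable that returns an adjusted classifier, and accuracy stands in for whichever metric is tracked. The source does not say which data the Final Score was measured on; the sketch assumes the test set.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical types: a platform maps a verbatim to a label, and a tuning
# round returns an adjusted platform given labeled examples.
Classifier = Callable[[str], str]
Dataset = List[Tuple[str, str]]  # (verbatim, gold label)
Tuner = Callable[[Classifier, Dataset], Classifier]


def accuracy(model: Classifier, data: Dataset) -> float:
    """Share of verbatims whose predicted label matches the gold label."""
    return sum(model(text) == gold for text, gold in data) / len(data)


def staged_scores(model: Classifier, tune: Tuner,
                  train: Dataset, test: Dataset) -> Dict[str, float]:
    """Score a platform at the four points in the evaluation."""
    scores = {"raw": accuracy(model, train)}   # out of the box, training data
    tuned = tune(model, train)
    scores["tuned"] = accuracy(tuned, train)   # after the first tuning round
    scores["test"] = accuracy(tuned, test)     # unseen, possibly drifted data
    retuned = tune(tuned, test)                # time-boxed second tuning round
    scores["final"] = accuracy(retuned, test)  # assumption: scored on the test set
    return scores


# Toy tuner for illustration only: it memorizes the labeled examples it is given
# and falls back to the previous model for anything else.
def memorize(model: Classifier, data: Dataset) -> Classifier:
    table = dict(data)
    return lambda text: table.get(text, model(text))


train = [("great service", "positive"), ("fault count stable", "neutral"),
         ("long wait", "negative")]
test = [("slow response", "negative"), ("helpful agent", "positive")]
print(staged_scores(lambda text: "neutral", memorize, train, test))
```

The toy data and memorizing tuner only show the flow; a memorizing tuner trivially reaches a perfect score after each round, so the printed numbers say nothing about any real platform. The gap between the Test and Final scores is the part that speaks to tunability.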

Our three areas of analysis included:

“We’ve seen how well AI can enhance VoC programs when used to augment human capabilities. Just don’t underestimate operational aspects; unlike previous technologies, AI requires SMEs to actively maintain, evaluate and tune the solution.”

– Chirag Gandhi, Chief Technology Officer, McorpCX

After assessing the three types of platforms, we eliminated both the low-code platforms and the large language models. Their day-to-day operation and maintenance would require more capacity than the client could justify sustaining over time, so they failed the test of being sufficiently independent of the organization’s AI team.

Additionally, integrating these tools into the broader organizational ecosystem and sustaining them there would require an ongoing coding capability best served by skilled external resources, which, as the client made clear at the outset, was not their desired outcome.

We also found, unsurprisingly, that because of the specialized nature of the client’s content and language usage in the domain, the ability to fine-tune out-of-the-box models was another important hurdle. For example, in everyday speech “fault” is considered negative, but in the quality assurance domain “fault” is used to indicate a count, so it cannot be considered negative unless accompanied by a verb such as “increase.” Extremely user-friendly analysis and tuning capabilities therefore became an important criterion for any tool.
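To make the “fault” example concrete, here is a minimal Python sketch of a lexicon-based sentiment scorer with one domain override. The lexicon, the list of worsening verbs, and the choice to apply the rule across a whole verbatim are hypothetical simplifications; none of the evaluated platforms is claimed to tune sentiment this way.

```python
import re
from typing import List

# Hypothetical general-purpose lexicon: +1 positive, -1 negative.
GENERAL_LEXICON = {"great": 1, "helpful": 1, "slow": -1, "poor": -1,
                   "fault": -1, "faults": -1}
# In the QA domain these words describe a count, not a complaint.
COUNT_TERMS = {"fault", "faults"}
# Assumed verbs that turn a count term into a negative signal.
WORSENING_VERBS = {"increase", "increased", "increases", "increasing"}


def tokenize(text: str) -> List[str]:
    """Lowercase alphabetic words; digits and punctuation are dropped."""
    return re.findall(r"[a-z]+", text.lower())


def general_score(text: str) -> int:
    """Out-of-the-box scoring: every lexicon hit counts, 'fault' included."""
    return sum(GENERAL_LEXICON.get(word, 0) for word in tokenize(text))


def domain_score(text: str) -> int:
    """QA-tuned scoring: count terms are neutral unless a worsening verb appears."""
    words = tokenize(text)
    worsening = any(word in WORSENING_VERBS for word in words)
    score = 0
    for word in words:
        if word in COUNT_TERMS:
            score += -1 if worsening else 0
        else:
            score += GENERAL_LEXICON.get(word, 0)
    return score


print(general_score("Fault count reported this week"))     # generic lexicon
print(domain_score("Fault count reported this week"))      # domain rule
print(domain_score("Faults increased after the update"))   # domain rule
```

A rule like this is easy to state for one term, but each such term needs its own rule, written and maintained by someone who knows the domain. That is why the ease of tuning mattered as much as out-of-the-box accuracy.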

One benefit of our analysis was that we identified and demonstrated some inconsistencies, introduced by human classification and judgment, in the client’s current methodology. Although the data was insufficient to determine whether these inconsistencies were due to bias or noise, it was clear from our discussions that the team had not recognized them before this review.
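The source does not say how these inconsistencies were measured. One common way to quantify agreement between human coders is Cohen’s kappa, which corrects raw agreement for the agreement expected by chance. The Python sketch below is a swapped-in illustration that assumes two coders independently labeled the same verbatims; the labels are made up.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(coder_a: Sequence[str], coder_b: Sequence[str]) -> float:
    """Agreement between two coders on the same items, corrected for chance."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same, non-empty set of items")
    n = len(coder_a)
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement: probability both coders pick the same label at random,
    # given how often each of them uses each label.
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # convention: both coders used one identical label throughout
    return (observed - expected) / (1 - expected)


coder_a = ["positive", "positive", "negative", "negative"]
coder_b = ["positive", "negative", "negative", "negative"]
print(cohens_kappa(coder_a, coder_b))
```

Values near 1 mean the coders agree well beyond chance; values near 0 mean their agreement is about what chance alone would produce. A low kappa flags inconsistency but does not by itself distinguish bias from noise.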

Most importantly, our measurements of precision, recall, F1, and accuracy showed that AI has come a long way: it is now possible to obtain results that justify investing in AI for NLP-based analysis of verbatims in a VoC program.
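For readers less familiar with these metrics, this Python sketch shows how precision, recall, F1, and accuracy are computed from gold and predicted labels. It treats one label (for example, “negative”) as the target class; the source does not say whether its figures were per class or averaged across classes, so averaging is left out, and the sample labels are made up.

```python
from typing import Dict, Sequence


def classification_metrics(gold: Sequence[str], predicted: Sequence[str],
                           target: str) -> Dict[str, float]:
    """Precision, recall and F1 for one target label, plus overall accuracy."""
    if len(gold) != len(predicted) or not gold:
        raise ValueError("gold and predicted must be non-empty and equal length")
    pairs = list(zip(gold, predicted))
    tp = sum(g == target and p == target for g, p in pairs)  # correctly flagged
    fp = sum(g != target and p == target for g, p in pairs)  # wrongly flagged
    fn = sum(g == target and p != target for g, p in pairs)  # missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = sum(g == p for g, p in pairs) / len(pairs)
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}


gold = ["negative", "negative", "positive", "neutral", "negative"]
predicted = ["negative", "positive", "positive", "neutral", "neutral"]
print(classification_metrics(gold, predicted, target="negative"))
```

Precision asks how many of the verbatims a tool flagged were actually in the class; recall asks how many of the verbatims in the class it found; F1 is their harmonic mean, so a tool cannot score well by excelling at only one of them.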

NLP implementations are not like a typical tool implementation, in which you provide the requirements and the technical team delivers a fully configured tool that needs only occasional break-fixes or enhancements. NLP implementations are highly dependent on the data they process, and that data tends to change over time as the usage of terms, or the topics being discussed, shift.
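One way to watch for this kind of change, assuming verbatims are collected in batches by period, is to compare the word distributions of a reference period and a recent one. The Python sketch below uses Jensen-Shannon divergence for that comparison; it is a swapped-in technique that the source does not describe, and `DRIFT_THRESHOLD` is a hypothetical value that would need calibrating on real history.

```python
import math
import re
from collections import Counter
from typing import Dict, Iterable


def term_distribution(texts: Iterable[str]) -> Dict[str, float]:
    """Relative frequency of each lowercase word across a batch of verbatims."""
    counts = Counter(word for text in texts for word in re.findall(r"[a-z]+", text.lower()))
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no words found in this batch of verbatims")
    return {word: count / total for word, count in counts.items()}


def js_divergence(p: Dict[str, float], q: Dict[str, float]) -> float:
    """Jensen-Shannon divergence in bits: 0 for identical, 1 for disjoint vocabularies."""
    words = set(p) | set(q)
    m = {w: 0.5 * (p.get(w, 0.0) + q.get(w, 0.0)) for w in words}

    def kl_to_m(dist: Dict[str, float]) -> float:
        # Kullback-Leibler divergence from dist to the midpoint distribution m.
        return sum(v * math.log2(v / m[w]) for w, v in dist.items() if v > 0)

    return 0.5 * kl_to_m(p) + 0.5 * kl_to_m(q)


DRIFT_THRESHOLD = 0.2  # hypothetical; would need calibrating on real history


def drifted(reference: Iterable[str], recent: Iterable[str]) -> bool:
    """Flag a batch whose vocabulary has moved too far from the reference batch."""
    return js_divergence(term_distribution(reference), term_distribution(recent)) > DRIFT_THRESHOLD


last_quarter = ["fault count stable", "agent was helpful"]
this_quarter = ["new portal login errors", "portal timeout again"]
print(drifted(last_quarter, this_quarter))
```

A check like this only says that the language has moved, not whether the model’s labels have become wrong; a flagged batch is a prompt for an SME to re-score a sample and decide whether another tuning round is needed.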

To evaluate how effectively this can be managed, a VoC program needs to consider both the work required to continuously tune the platform and handle exceptions, and the capability the program would need to perform those tasks. In other words, does the value provided by the automation adequately offset the additional operational burden? When the answer was “yes,” we found NLP could be of great benefit.

For any VoC leader looking for an edge, AI is here and ready to augment human capabilities. The three primary ways to leverage the advances in NLP to augment VoC work include:

While it is premature as a fully “hands-off” approach, we found recent AI-driven advances in NLP to be significant. This project demonstrated the applicability of NLP to open-ended VoC questions, providing the operational benefits of improved speed and consistency that, in the right circumstances, can more than offset the personnel lift.

McorpCX is independently recognized as a top customer experience services and solutions company, enabling and guiding leading organizations since 2002.

Touchpoint Mapping®, Touchpoint Metrics® and Loyalty Mapping® are registered trademarks of McorpCX, LLC